AI Can’t Say No

By Raymond Brigleb

One of the things I notice most quickly when working with large language models is how bad their judgment is.

I don’t mean they get facts wrong, though they do. I mean something more specific: when faced with a choice, they almost always make the wrong call.

Here’s how it typically goes. I’ll ask Claude Code to evaluate a space for me, maybe a set of command-line tools for something I’m trying to accomplish. It will research the options, gather details, and eventually present a summary: “Here are all the choices, and here’s the one I would use.” It always does this; it’s probably trained to.

But here’s the thing: I almost never agree. If it offers a multiple-choice list, I’m nearly guaranteed to pick something different. It’s not that I’m contrarian. It’s that the model seems to lack whatever faculty makes one option obviously better than another. It can enumerate trade-offs. It just can’t weigh them.

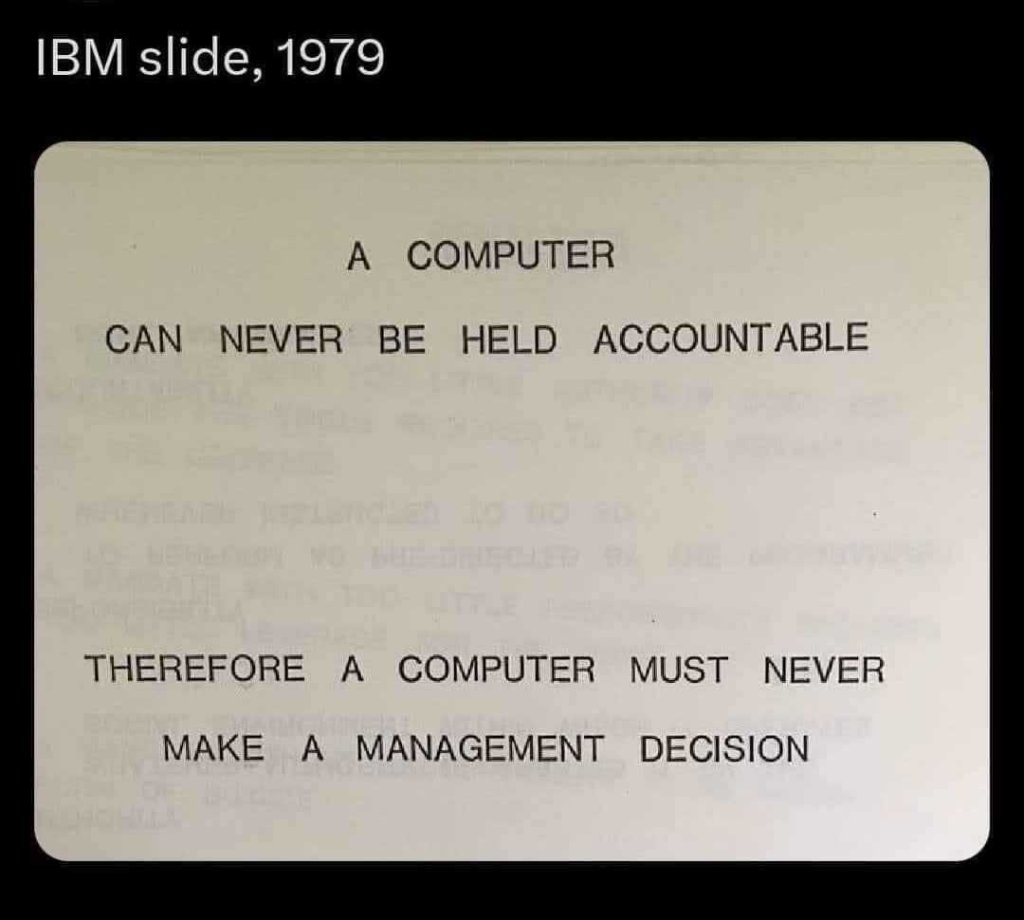

I suspect this is deeply related to how these models are trained. They’re given tasks and rewarded for completing them. They’re goal-oriented in the most literal sense: they want to finish, to produce an output, to give you something. When presented with a choice, they pick whatever gets them to done.

But good judgment often means not doing something. Saying no. Recognizing that the elegant solution isn’t worth the complexity it introduces, or that the popular tool has a design philosophy that’s going to fight you in six months.

Apple famously “says no a thousand times” to protect the products they ship. Working with agentic AI requires the same discipline—except you’re the one who has to say no, over and over, because the model won’t.

I’m surprised how often I write what I think is a clear description of a task, only to discover partway through that Claude made a quietly bad decision early on that I now have to unwind. You get better at catching these, but it feels like a parlor trick—specialized knowledge about how to work around a tool’s limitations rather than knowledge about the actual work.

If you talk to these models like you’re talking to a capable colleague, you’re going to end up with their judgment baked into your projects. And their judgment, right now, isn’t very good.